Server Load Balancing

Server Load Balancing distributes application traffic across multiple servers so that all requests can be fulfilled in a timely manner. For example, if a user connects to a website and the page does not load within seconds, he won't complain about "overloaded routers" in transit, and he won't blame the web server for having to serve 1000 other users at the same time. He will probably only complain about the wait and may choose to go somewhere else where he can find similar information. By using load balancing, the provider can make sure that content loads as quickly as possible and that no bottleneck keeps the user from coming back. Additional features that protect and offload CPU and memory resources help to speed up the process as well.

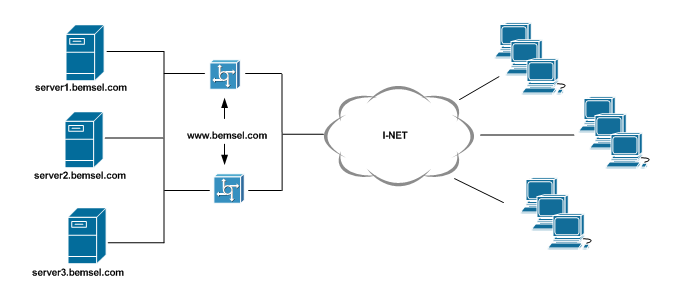

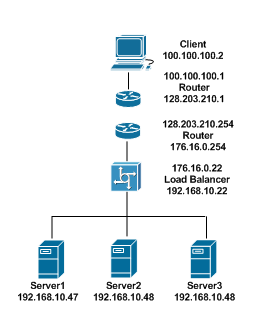

The DNS entry of www.bemsel.com is tied to the VIP of the Load Balancer

Load Balancing improves network performance by distributing traffic efficiently so that individual servers are not overloaded by bursts of activity. There are many ways to provide Server Load Balancing, and different vendors use different algorithms and features to help customers.
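To make "different algorithms" a bit more concrete, here is a minimal Python sketch of two commonly used selection strategies, round robin and least connections. It is not any vendor's implementation; the class names and the server labels are made up for illustration.

```python
import itertools


class RoundRobin:
    """Hand out servers in a fixed rotation, ignoring their current load."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(servers)

    def pick(self):
        return next(self._cycle)


class LeastConnections:
    """Pick the server that currently has the fewest active connections."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def pick(self):
        # On a tie, min() returns the first server in insertion order.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call this when a connection to the server is closed.
        self.active[server] -= 1


servers = ["server1", "server2", "server3"]  # hypothetical labels
rr = RoundRobin(servers)
rr_order = [rr.pick() for _ in range(4)]

lc = LeastConnections(servers)
first = lc.pick()
second = lc.pick()
lc.release(first)
third = lc.pick()
```

Round robin is the simpler of the two, while least connections adapts when some requests take much longer than others.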

The most common topologies are one-armed, routed and transparent.


Typical

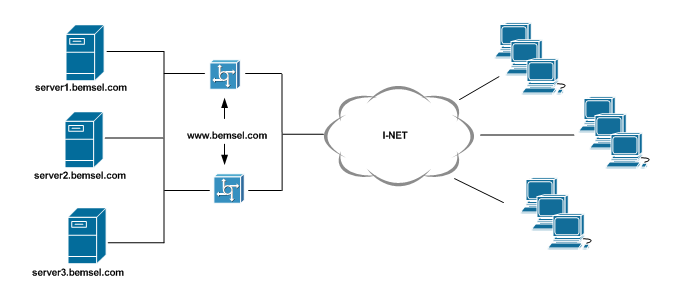

Traffic Flow

Client 100.100.100.2 sends a request to www.bemsel.com. The DNS entry of www.bemsel.com points to the Load Balancer's public IP 128.203.210.22, which is the Red Side of the Load Balancer. The packet has the source 100.100.100.2 and the destination 128.203.210.22. When the Load Balancer receives the packet, it chooses a server and rewrites the packet's destination to 192.168.10.47. The source IP is still 100.100.100.2, so the server logs show the real client IP.

Server 1 replies to the request with source IP 192.168.10.47 and destination 100.100.100.2. Since the server's default gateway is the Green Side of the Load Balancer, the packet is handed over to 192.168.10.22. The Load Balancer now rewrites the source address from 192.168.10.47 to the Red Side IP address 128.203.210.22 and forwards the packet to the client.

All the client knows is that it sent a request to www.bemsel.com at 128.203.210.22; it has no idea which backend server fulfilled the request. If the client had received the response directly from a backend server, with that server's source address, it would not know what to do with it and would discard the packet.
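The two address rewrites in this flow can be sketched in a few lines of Python. This is only a toy model of the header rewriting, using the addresses from the example. The `Packet` class and the `inbound`/`outbound` function names are assumptions for illustration, and a real load balancer also tracks ports and connection state.

```python
from dataclasses import dataclass, replace

VIP = "128.203.210.22"        # Red Side address from the example
BACKENDS = ["192.168.10.47"]  # Server 1; further servers would be listed here


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str


def inbound(packet: Packet, backend: str) -> Packet:
    """Client to server: rewrite only the destination, keep the client source."""
    if packet.dst != VIP:
        raise ValueError("packet is not addressed to the virtual IP")
    return replace(packet, dst=backend)


def outbound(packet: Packet) -> Packet:
    """Server to client: rewrite the source back to the virtual IP."""
    if packet.src not in BACKENDS:
        raise ValueError("packet did not come from a known backend")
    return replace(packet, src=VIP)


request = Packet(src="100.100.100.2", dst=VIP)
to_server = inbound(request, BACKENDS[0])

# The server answers by swapping source and destination.
reply = Packet(src=to_server.dst, dst=to_server.src)
to_client = outbound(reply)

print(to_server)
print(to_client)
```

The client only ever sees 128.203.210.22 as the peer address, which is why the reply has to go back through the Load Balancer.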


One-Armed Mode

For a one-armed configuration, all traffic passes over a single network interface. The Load Balancer accepts the client's connection on a listening socket and holds it open. It then creates a connection of its own to a backend server chosen by the load balancing policy. When it gets the requested data from the server, the Load Balancer sends it back to the client.

Advantage: One-Armed Mode is easy to deploy and perfect for evaluation, as I did with a jetNexus Virtual Appliance in my lab.

Disadvantage: The server sees every request as coming from the Load Balancer and not from the real client on the internet. If the traffic is HTTP based, the client's IP can be embedded into a custom header (X-Forwarded-For) so that the server can still collect stats.
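As a rough illustration of the X-Forwarded-For idea, the Python sketch below adds the client address to the request headers before they are passed on to the backend. The function name `add_forwarded_for` is hypothetical and this is not how jetNexus or any other product implements it. It only shows the common convention of appending the address to an existing comma-separated list.

```python
def add_forwarded_for(headers: dict[str, str], client_ip: str) -> dict[str, str]:
    """Return a copy of the headers with client_ip recorded in X-Forwarded-For."""
    updated = dict(headers)
    # HTTP header names are case-insensitive, so look for any spelling.
    existing = next((k for k in updated if k.lower() == "x-forwarded-for"), None)
    if existing is None:
        updated["X-Forwarded-For"] = client_ip
    else:
        # Another proxy already added an entry: append to the list.
        updated[existing] = f"{updated[existing]}, {client_ip}"
    return updated


incoming = {"Host": "www.bemsel.com"}
forwarded = add_forwarded_for(incoming, "100.100.100.2")
print(forwarded)
```

The backend can then log the header value instead of the connection's source address. Because a client can send this header itself, the server should only trust it when the request really came from the Load Balancer.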


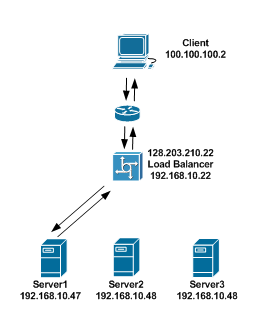

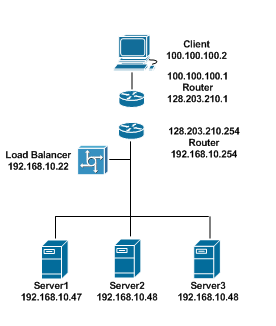

Two-Armed Routed Mode

In a two-armed routed configuration, all traffic between the client and the Load Balancer goes over the Red Side (176.16.0.22). All traffic between the Load Balancer and the backend servers goes over the Green Side (192.168.10.22). The virtual server (176.16.0.22) has to sit on a different subnet than the real servers. The Load Balancer is configured as the default gateway for Server 1, Server 2 and Server 3.

Advantage: Backend servers can be masked from the clients. Additional filtering can also be done on the Load Balancer.

Disadvantage: Servers have to point their default route to the Load Balancer. The virtual IP address has to be on a different subnet than the servers.
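The subnet requirement is easy to check with Python's standard `ipaddress` module. In the sketch below, the /24 prefix for the server network and the reuse of 192.168.10.47 as Server 1 are my assumptions, since no netmask is given here. The helper name `routed_mode_ok` is made up as well.

```python
import ipaddress


def routed_mode_ok(vip: str, servers: list[str], server_network: str) -> bool:
    """True if all servers are inside server_network and the VIP is outside it."""
    network = ipaddress.ip_network(server_network)
    servers_inside = all(ipaddress.ip_address(s) in network for s in servers)
    vip_outside = ipaddress.ip_address(vip) not in network
    return servers_inside and vip_outside


# Assumed Green Side network: 192.168.10.0/24
ok = routed_mode_ok("176.16.0.22", ["192.168.10.47"], "192.168.10.0/24")

# A VIP inside the server subnet would not fit the routed design.
not_ok = routed_mode_ok("192.168.10.100", ["192.168.10.47"], "192.168.10.0/24")

print(ok, not_ok)
```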


Two-Armed Transparent Mode

In a two-armed transparent configuration, the Load Balancer serves as a bridge. The virtual server sits on the same subnet as the real servers, but is physically separated from them by the Load Balancer.

Advantage: Low design impact, as no new subnets need to be created.

Disadvantage: The risk of creating a loop is high. To avoid it, use a careful Spanning Tree configuration or make sure there is only one link between servers and clients.
